(context, content warning: dangerously furry)

I’ve been messing around with the StableDiffusion approach for a month or so now. I do share some concerns (eg, access into the fandom for new artists that don’t use these tools) and even have different ones (eg, AI-assisted ‘tracing’, disruption of social networks for artists). But I also think some of the limitations are non-obvious unless you’ve used the software, are likely to remain for at least a good few years, and combined with the social responses, are going to prevent at least some of the worst-case issues.

People have already built specialized AI art generators for furry stuff, specifically. They’re not very well-trained -- Hasuwoof’s fine-tune accidentally dropped almost all species information from the training, and surprised him by working anyway -- but they do create some furry art reasonably well.

(yiffy-epoch18 model, prompt: anthro, detailed fluffy fur, impasto impressionism, clothed.)

And there are some styles and components they do okay, even if there’s obvious room for improvement:

(yiffy-epoch18 model, prompt: uploaded on e621, safe content, solo, anthro, ((lion)), astronaut, mane, smiling, outer space, space station, rocket, male, high, by Michael & Inessa Garmash, Ruan Jia, Pino Daeni)

To the ‘not bad for your first DeviantArt submission’ range:

(yiffy-epoch18 model, prompt: trending on artstation, e621, cyberpunk wolf (((in frame))), ((by kenket, photonoko)), muzzle gray fur [mane] ((tail)), ((detailed glossy texture)),high resolution, extremely high quality digital art,punk clothes,[futuristic],cybernetic, detailed [city] dangerous area background, realistic professional photograph, [sunglasses goggles],[neon lights], ((full body photo)))

But there’s a bit of an emphasis on some there. It’s reasonably good at profile pics, and moderately good at single-character pinups. You can kinda kitbash it into having two characters interacting with each other (whether action, ‘action’, or slice-of-life) if you’re willing to fight it for long enough. Having multiple perspective views on the same character is essentially impossible; having it understand that a prompt wants some character descriptions applied to only one character, and other character descriptions applied to only another... the tooling doesn’t exist.

It can look good, ish, if achieving that in a random way. It can’t really look good in a specific way.

It doesn’t do text, or text-like symbols; even ‘simple’ logos like the NASA symbol get munged, and forget something complicated. You’ll notice those munged signatures in the examples above? These aren’t clones or copies of original signatures being merged; it’s the neural network ‘knowing’ that there’s supposed to be some sort of high-density linework in corners. It does the same to the Patreon logo: it ‘knows’ certain artists have an orange circle with a symbol inside, but it doesn’t know what letter that is, or whether non-patreon artists use it.

This isn’t going to replace ref sheets, and indeed, there are some pretty significant limitations to the current approaches, some of which will take serious advances in the whole field before it could even get close. Likewise, more specific anatomy ranges from mediocre (horns) to nonsensical (hands, feet/paws, eyes).

The tokenizer doesn’t really ‘understand’ how human speech works and there are no obvious changes that would get to there from here, leading to often bizarre side effects from common prompts. Trying to get rainbow-feathered birds also gets a rainbow in the background more often than not. It’s not just that your original character Donut Steel will have to tone down contrasts; it’s that trying to give a single character in a scene glasses will usually put them on everybody, and put glasses filled with drinks on the table, if you’re lucky.

((somewhat ironically, this means same-sex couples are easier than het ones, if only because there’s less guessing or at least fewer problems about figuring what bits go where.))

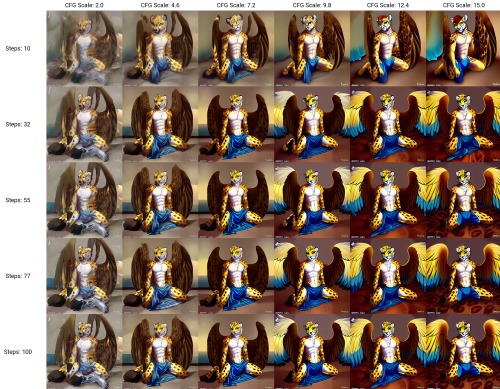

If you try to give a cheetah rosettes, it might not know when to stop:

(yiffy-epoch18 model, prompt: by garnetto and ruiadri and snowskau, solo, anthro, male cheetah, blue feathery wings, intricate detail, fluffy fur, clothed)

((not very good facial anatomy, but also note the ‘signatures’ are more fake than normal here: of these three artists, only garnetto regularly signs their work, and they do so with a single angular- and thick-text CHERRYBOX.))

That’s not specific to furry art -- someone put a challenge up to make a picture of a pendant of metal shaped like a moth, with some rust, and the vast majority of submissions ended up with blue ‘glass’ for some reason -- but for obvious reasons this is going to be more frustrating for character design. And anything you’re not specific about can end up filled in randomly.

In that sense, it’s an interesting toy. I’m sure that there are some people who will try to use purely prompt-generated images as their sole or primary interaction with the furry fandom, and I expect that the FurAffinity/DeviantArt bans on AI-generated art will cause some drama (and people trying to sneak AI-gen’d art through will cause much more), but without readily available specificity, I’m not sure that most commissioned or even requested art will move in that direction. It’s not just that the tool would struggle to make a bad comic, even with the assistance of a great artist driving it; it’s that it would struggle to make a sticker pack on its own. Or if you tell it to do knots and shibari, you’re gonna have a bad time.

But more interestingly, a lot of these weaknesses are things that artists can shore up, and smaller-name artists are more likely to benefit from shoring up, in ways that help the smaller-name artists build their skills (or at least avoid wrist-breaking tedium: cf. the rosettes and feathers above).

One of the less well-covered components to StableDiffusion (and its branches) is the ‘img2img’ capability, where it tries to introduce and remove noise from (optionally, parts of) an introduced image to more closely match a prompt.
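As a rough illustration of the "introduce noise into (parts of) an image" half of that description, here is a toy sketch. It is not StableDiffusion's actual code: the function name `noise_masked`, the flat list of pixel values, and the single `alpha_bar` knob are all assumptions made for the example. It applies the usual diffusion forward step only where a mask allows it; the "remove noise" half is done by the trained model, steered by the prompt, and is not modelled here.

```python
import math
import random


def noise_masked(pixels, mask, alpha_bar, rng):
    """Noise only the masked entries of a flat list of pixel values.

    Hypothetical helper for illustration. Uses the standard forward step
        x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * eps,  eps ~ N(0, 1)
    alpha_bar = 1.0 leaves the image untouched; alpha_bar = 0.0 replaces
    every masked pixel with pure noise.
    """
    if not 0.0 <= alpha_bar <= 1.0:
        raise ValueError("alpha_bar must be in [0, 1]")
    if len(pixels) != len(mask):
        raise ValueError("pixels and mask must have the same length")

    keep = math.sqrt(alpha_bar)          # how much of the original survives
    spread = math.sqrt(1.0 - alpha_bar)  # how much noise is mixed in

    out = []
    for value, editable in zip(pixels, mask):
        if editable:
            out.append(keep * value + spread * rng.gauss(0.0, 1.0))
        else:
            # Outside the mask, the artist's original pixel is kept as-is.
            out.append(value)
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.2, 0.4, 0.6, 0.8]
    mask = [False, True, True, False]  # only the middle two pixels may change
    print(noise_masked(image, mask, alpha_bar=0.5, rng=rng))
```

The point of the mask and the noise level is control: the less noise is mixed in and the smaller the masked region, the more of the artist's original composition survives whatever the model does next.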

(‘traditional’ digital media by mantiruu)

(img2img output, unknown prompt, unknown model)

((There are better examples, but I’m not comfortable putting someone else’s work through img2img without permission.))

Now, that’s not ideal -- the artist themself was more interested than happy with it. But they also seemed to be trying to find ways to just get the fur texturing in without the broader modifications, and it should be possible. Non-furry artists have had reasonable success at drawing augmentation of this scope, albeit with some limits, and this gives a lot of control of important capabilities like composition, character design, and layout back to the artist. And it’s not hard to think of more general uses for that sort of capability.

In many senses, this is a lot less interesting -- many of these tricks are things you could do in conventional digital art tools with the right brushes or filters. And a lot of artists are going to prefer traditional digital or physical tools instead even if img2img worked a lot better than it currently does. At the very least, there’s a lot more documentation on how to use watercolors or SAI. Even for those who do want to use this tool, working with it is part and parcel of understanding the changes it’s making, often in ways that eventually make it easier to do in ‘traditional’ ways.

But I think another plausible future has a lot of ‘AI’ art that’s mostly got an artist in the driver’s seat, and where it’s more relevant for artists who want to understand how to improve their own art and style rather than for those at the top of their game. There’ll be a handful of people using txt2img at length, but few (if any) will be doing it as a full workflow for commissions, a larger number will be nerds doing it just to see what they can manage, and more will be doing so to avoid commissioning a new character’s first ref pic “and they’re a green wolf with blue hair and a leather jacket” and three hundred revision requests.

This still has a lot of potential for drama: img2img can be used as easily as PhotoShop to ‘trace’ a source in exploitative ways, inpainting to change a character’s gender is going to be a problem when done without permission, and for now it’s hard to run without a graphics card with more than 6GB of VRAM, which is out of scope for many artists.

There probably will be some economic impacts on artists -- there’s a lot of ‘drawovers’ you can’t do with StableDiffusion, but there’s at least a few that you can, and drawover commissioners tend to be less picky anyway, for a trivial example. Hosting sites are going to have to make a decision on how to handle it, and it’s not unreasonable for some (or even most) to ban it lest traditional artists have difficulty dealing with what is often just spam.

And I do share your concerns that, just as digital art colorization and shading made entry to the field more intimidating to new or younger artists, the output of AI-assisted art may do the same, even and perhaps especially if it doesn’t actually chop out the lower-end for existing artists. And over long time-scales, I’m a lot less confident that the limitations of pure AI generation will hold (though this is probably a decade+ before it’s accessible to home users, even if the tool itself came out tomorrow).

But I’m less apocalyptic about it than I was before trying the stuff out.